Ultra-Low Latency live video streaming

Reducing latency for live video streams delivered using HTTP adaptive bit rate (ABR) technology has become a top priority for service providers that are seeking to deliver a better multi-screen viewing experience to consumers.

Latency is characterized as the delay between the time an event actually occurs (real-time) and the time the event is viewed by a consumer on their screen. With legacy broadcast TV signals, latency is approximately 5 seconds. With the introduction of traditional IPTV services a decade ago, latency rose to around 10 seconds. Today’s over-the-top (OTT) implementations of HTTP ABR technology can exhibit latency of 30 seconds or more for live content. Latency reduction requires a holistic approach to identifying and addressing sources of delay within the video streaming workflow. With the right approach, service providers can reduce latency for OTT streams to broadcast levels or better.

The State of Live Video Consumption

Against a backdrop of rapid transformation in the video services market, one factor remains the same - people love to watch live video programming. While consumer behaviors are shifting toward online and mobile viewing versus TV, consumer interest in sports and news content remains strong. Live events like FIFA’s World Cup are a great example. The 2018 World Cup final between France and Croatia attracted a combined global audience of 1.12 billion and more than 78 percent of viewers (884 million people) watched the event live. Live services are especially important to pay TV operators facing increased competition for subscribers from online services. According to a study published by Altman Vilandrie & Company, 52 percent of all pay TV customers indicated that live sports was a top reason for subscribing to Pay TV and 63 percent of all viewers identified news content as a main reason to subscribe. Needless to say, high quality live video services remain a key part of the value proposition consumers have come to expect from their video service provider.

Why is Latency Important?

The truth is that latency is only important under certain circumstances. Delays may go unnoticed by consumers when viewing live programming in isolation, as they have no frame of reference for what constitutes live and will not recognize that their program is delayed. However, when viewers are in close proximity with other screens that have a lower latency signature, or when information about the event is available through real-time services like social media, the delay becomes very noticeable. A great example is the person watching a football game on their tablet at home in their apartment. If their neighbor next door is watching the same game via broadcast TV, latency will become obvious when loud cheers are heard through the wall a full 25 seconds before the same scene reaches the tablet. This delay can be frustrating and is sometimes enough to cause a subscriber to switch providers. Consumers want their live streaming services to be truly live, with minimal delay. According to market research conducted by Limelight Networks, 59.5 percent of people around the world said they would be more likely to watch live sports online if the stream was not delayed from the live broadcast. Fortunately, there are solutions available to help video service providers reduce stream latency.

Understanding HTTP ABR Latency

HTTP adaptive bit rate (ABR) delivery is the predominant method of streaming video to smart TVs, tablets, PCs, mobile phones, and other devices. With their ability to support delivery over unmanaged networks, HTTP ABR content formats and streaming protocols have helped fuel the rise of online video viewership. While ABR technology has proven to be extremely successful in expanding the reach of video services to new devices, latency for live streaming has emerged as a major concern affecting consumers’ quality of experience.

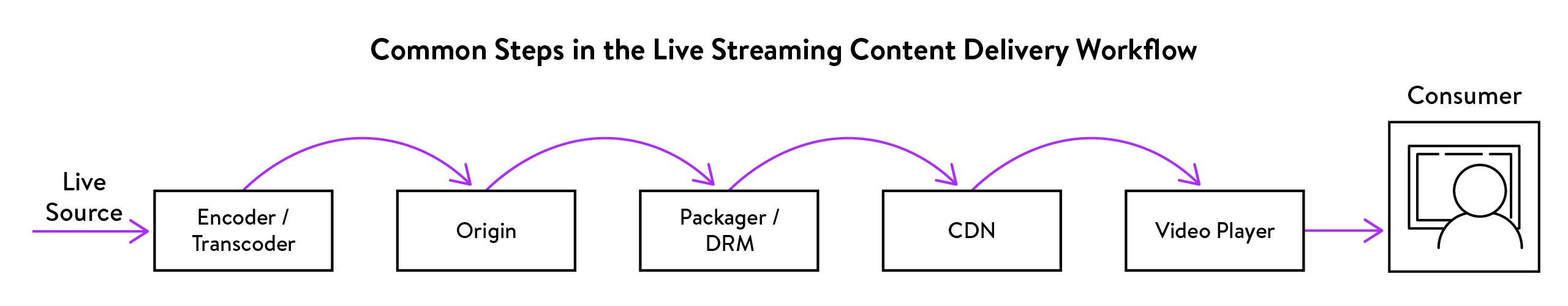

ABR stream latency is a product of how content is encoded, processed, and exchanged between system components in a typical content distribution workflow. Commonly, the smallest addressable element of an encoded ABR file is a segment. A segment is a time slice of content, typically 2 to 10 seconds in duration, and each time slice is encoded at multiple bit rates. A single TV show consists of thousands of these segments that, when delivered and processed in sequence, present one contiguous video stream. To fulfill new stream requests, video players pull content through the distribution network segment by segment. To reach client video players, content must traverse a series of network elements that includes encoders, origin servers, and CDN caches. Each element in the chain introduces some latency.

Common sources of HTTP ABR stream latency include:

- Buffering

Various elements in the content distribution workflow use buffers that temporarily store data in physical memory as it is relayed between system components. Video players, as an example, will often store several full segments in memory before they begin playback. While they are a major source of latency, buffers also play an important role in improving the viewing experience for streamed video. Without buffering, any short drops in network bandwidth would instantly cause a stall in video playback. Buffering extends the time available for workflow components to receive new data from upstream systems, introducing a margin of safety that allows for temporary fluctuations in network bandwidth. This results in smoother video playback, with fewer stalls.

- Data communication

The methods used to exchange data between network elements can also be a source of delay. With standard ABR distribution, segments are discrete and are not transmitted downstream until a full segment is complete. Delays are introduced as each component in the chain waits for full segments to be built, transmitted, and received before proceeding to the next steps.

- Processing

Additional delays can be created by video processing activities that occur during the content distribution process. This can include activities such as transcoding, packaging, or content encryption.

- ABR Segment Size

ABR segment size is directly linked to buffering, communication, and processing delays. Longer segment durations increase buffer sizes and extend the time it takes to process and relay segments. Shorter segment durations have the opposite effect, reducing overall stream latency.
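
To make the relationship between segment size and latency concrete, the sources of delay listed above can be added up in a rough budget. The following is a minimal sketch, not a measurement tool: the class name, the field names, and the example delay values (2 seconds of encoding, 1 second of network transfer, 3 buffered segments) are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class LatencyBudget:
    """Rough end-to-end latency estimate for segment-based ABR delivery.

    All fields are illustrative assumptions, not measured values.
    """
    segment_duration_s: float    # ABR segment length in seconds
    player_buffer_segments: int  # full segments the player holds before playback
    encode_delay_s: float        # assumed encoder/transcoder processing time
    network_delay_s: float       # assumed origin + CDN transfer time

    def total_seconds(self) -> float:
        # A segment is not sent downstream until it is fully built,
        # so packaging adds one full segment duration of waiting.
        packaging_wait = self.segment_duration_s
        # The player stores several full segments before starting playback.
        player_buffer = self.player_buffer_segments * self.segment_duration_s
        return (self.encode_delay_s + packaging_wait
                + self.network_delay_s + player_buffer)


long_segments = LatencyBudget(segment_duration_s=6.0, player_buffer_segments=3,
                              encode_delay_s=2.0, network_delay_s=1.0)
short_segments = LatencyBudget(segment_duration_s=2.0, player_buffer_segments=3,
                               encode_delay_s=2.0, network_delay_s=1.0)

print(long_segments.total_seconds())   # 27.0
print(short_segments.total_seconds())  # 11.0
```

Under these assumed numbers, shortening segments from 6 seconds to 2 seconds cuts the estimate from 27 seconds to 11 seconds, because both the packaging wait and the player buffer scale with segment duration.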

Introducing Ultra-Low Latency CMAF

In early 2016, Apple and Microsoft announced that they would be collaborating on a new standard to reduce the complexity of delivering online video to multiple devices. The primary goal was to address the problem of ABR content format fragmentation. It had become common practice for video service operators to host multiple versions of the same content on their CDNs to ensure compatibility with a full range of consumer devices. For example, an MPEG-DASH encoded version and an Apple HLS version of the same movie asset would be stored on the CDN, knowing that some devices would only support one of these two formats. Video service operators and CDN providers complained that this raised storage costs and reduced CDN efficiency, leading Apple and Microsoft to act. In early 2018, a new standard, called the common media application format (CMAF), was officially published. Rather than defining a new format like MPEG-DASH and HLS, CMAF defined a new container standard that builds on the capabilities of these content formats. CMAF improved CDN and storage efficiency by enabling a single content asset to be played by any client. In addition to reducing format fragmentation, CMAF also introduced a special low latency mode that could be used to reduce lag times for live video streams. With updates to each component in the content distribution workflow, CMAF provides a way to reduce lag times to a few seconds or less.

Chunked Encoding and Chunked Transfer Encoding

CMAF’s ultra-low latency mode introduces a concept called chunked encoding. With CMAF, shorter duration “chunks” become the smallest referenceable element of an encoded video file. Chunk size is configurable and can be as small as 1 second or less in duration. These chunks can be combined to form a “fragment” and these fragments can be joined to form a “segment”.

Individual chunks can be transferred while they are being encoded using chunked transfer encoding. Chunked transfer encoding is a data transfer mechanism defined in HTTP/1.1 that can be used to relay CMAF chunks through the content delivery workflow. Using chunked transfer encoding, communication of CMAF chunks can flow between the encoder, origin, CDN cache, and video player without knowing the final size of the object that is being sent. This means chunks can be transferred before their parent segments are fully built, eliminating potential sources of delay throughout the network.
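
The wire format behind HTTP/1.1 chunked transfer encoding is simple: each piece of the body is sent as its length in hexadecimal, a CRLF, the data, and another CRLF, and a zero-length chunk marks the end. The sketch below illustrates only that framing. The function names are hypothetical, the payloads are placeholder bytes rather than real CMAF boxes, and the parser assumes a well-formed body with no chunk extensions or trailers.

```python
from typing import Iterable, Iterator, List


def frame_chunked(chunks: Iterable[bytes]) -> Iterator[bytes]:
    """Yield each payload framed for an HTTP/1.1 chunked message body."""
    for chunk in chunks:
        if not chunk:
            continue  # an empty chunk would be read as end-of-body
        size_line = f"{len(chunk):X}".encode("ascii")
        yield size_line + b"\r\n" + chunk + b"\r\n"
    # Zero-length chunk: the sender only now declares the body complete.
    yield b"0\r\n\r\n"


def parse_chunked(body: bytes) -> List[bytes]:
    """Split a complete, well-formed chunked body back into its payloads."""
    payloads = []
    pos = 0
    while True:
        line_end = body.index(b"\r\n", pos)
        size = int(body[pos:line_end], 16)
        pos = line_end + 2
        if size == 0:
            return payloads
        payloads.append(body[pos:pos + size])
        pos += size + 2  # skip the payload and its trailing CRLF


# Placeholder payloads standing in for CMAF chunks as the encoder emits them.
encoder_output = [b"cmaf-chunk-1", b"cmaf-chunk-2", b"cmaf-chunk-3"]
wire_frames = list(frame_chunked(encoder_output))
received = parse_chunked(b"".join(wire_frames))
print(received == encoder_output)  # True
```

Because `frame_chunked` is a generator, each frame can be written to the connection as soon as its chunk exists. The total length is never needed up front, which is what allows a chunk to move downstream before its parent segment is complete.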

Lowering Latency with CMAF

It is important to note that encoding content using CMAF containers does not lower latency by itself. Each component in the content delivery chain must be optimized for chunked encoding and chunked transfer encoding. Additionally, careful thought must be given to how to tune the implementation to find the right balance between latency improvements and stream continuity. As buffer durations get progressively smaller, tolerance for network bandwidth fluctuations will decrease. If the underlying network delivers very consistent performance, as is often the case with carriers’ high-speed data networks, the end-to-end workflow can be tuned to support extremely low latencies (2-3 seconds). For mobile networks where a higher degree of bandwidth variability is to be expected, tuning will be more conservative and latency slightly higher. In summary, latency improvements are achieved by applying optimizations to content workflow elements, not by implementing the format itself. Working with the right partners to dial in the best result is critical to having a low latency service that is also reliable. Done right, latency performance should be well within broadcast standards.
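
The trade-off between buffer size and stream continuity can be illustrated with a toy simulation. This is a deliberately simplified model and not how any particular player behaves: the function name, the one-second time step, and the throughput samples are assumptions. Each sample is the ratio of available network throughput to the stream bit rate, so 1.0 means the network delivers exactly one second of content per second.

```python
from typing import Sequence


def count_stalled_seconds(buffer_target_s: float,
                          throughput_ratios: Sequence[float]) -> int:
    """Count seconds in which playback stalls for a given buffer target.

    Toy model: the player starts with a full buffer, plays one second of
    content per step if it has it, then downloads `ratio` seconds of content.
    The buffer is capped at its target, since a live player cannot fetch
    content that has not been produced yet.
    """
    buffered_s = buffer_target_s
    stalled = 0
    for ratio in throughput_ratios:
        if buffered_s >= 1.0:
            buffered_s -= 1.0   # one second of content is played
        else:
            stalled += 1        # not enough content buffered: playback stalls
        buffered_s = min(buffered_s + ratio, buffer_target_s)
    return stalled


# Assumed network trace: two good seconds, a two-second dip, then recovery.
trace = [1.0, 1.0, 0.25, 0.25, 1.0, 1.0]

print(count_stalled_seconds(1.0, trace))  # 2
print(count_stalled_seconds(3.0, trace))  # 0
```

With this assumed trace, a 1-second buffer stalls for two seconds during the dip, while a 3-second buffer rides through it. That is the balance tuning has to strike: the smaller buffer lowers latency but leaves less margin for bandwidth fluctuations.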

In Conclusion

Live video services remain a key competitive differentiator for pay TV services. Reducing latency is critical to improving the quality of these services and keeping consumers satisfied. Implementing support for ULL-CMAF and performing optimizations throughout the content delivery workflow can bring latency for OTT-delivered content to broadcast levels or better, eliminating a potential source of subscriber frustration. It is critical that operators work with an experienced partner who can configure and tune the system to account for specific network environments and unique attributes of the end-to-end content delivery architecture. With the right advice and expertise, operators can be assured that they are delivering the best live viewing experience to every consumer.